wip intel


.gitignore (1 changed line, vendored)

@@ -6,6 +6,7 @@ tests/compile_commands.json
 
 .clangd/
 .cache/
+.vscode/
 
 *.o
 gpu-screen-recorder

@@ -7,7 +7,6 @@ where only the last few seconds are saved.

|

|||||||

|

|

||||||

## Note

|

## Note

|

||||||

This software works only on x11.\

|

This software works only on x11.\

|

||||||

Recording a window doesn't work when using picom in glx mode. However it works in xrender mode or when recording the a monitor/screen (which uses NvFBC).\

|

|

||||||

If you are using a variable refresh rate monitor, then choose to record "screen-direct". This will allow variable refresh rate to work when recording fullscreen applications. Note that some applications such as mpv will not work in fullscreen mode. A fix is being developed for this.\

|

If you are using a variable refresh rate monitor, then choose to record "screen-direct". This will allow variable refresh rate to work when recording fullscreen applications. Note that some applications such as mpv will not work in fullscreen mode. A fix is being developed for this.\

|

||||||

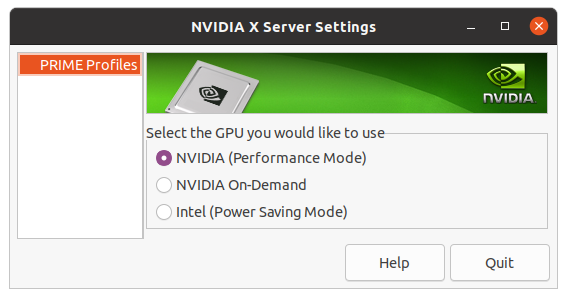

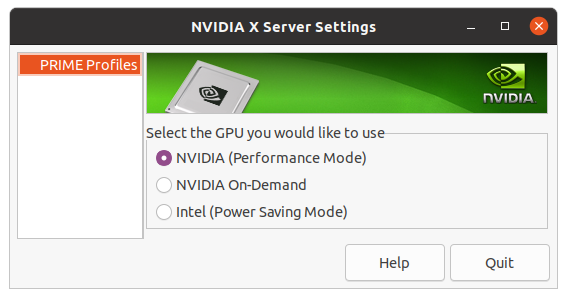

For screen capture to work with PRIME (laptops with a nvidia gpu), you must set the primary GPU to use your dedicated nvidia graphics card. You can do this by selecting "NVIDIA (Performance Mode) in nvidia settings:\

|

For screen capture to work with PRIME (laptops with a nvidia gpu), you must set the primary GPU to use your dedicated nvidia graphics card. You can do this by selecting "NVIDIA (Performance Mode) in nvidia settings:\

|

||||||

\

|

\

|

||||||

@@ -23,12 +22,12 @@ Using NvFBC (recording the monitor/screen) is not faster than not using NvFBC (r
 
 # Installation
 If you are running an Arch Linux based distro, then you can find gpu screen recorder on aur under the name gpu-screen-recorder-git (`yay -S gpu-screen-recorder-git`).\
-If you are running an Ubuntu based distro then run `install_ubuntu.sh` as root: `sudo ./install_ubuntu.sh`. You also need to install the `libnvidia-compute` version that fits your nvidia driver to install libcuda.so to run gpu-screen-recorder. But it's recommended that you use the flatpak version of gpu-screen-recorder if you use an older version of ubuntu as the ffmpeg version will be old and wont support the best quality options.\
+If you are running an Ubuntu based distro then run `install_ubuntu.sh` as root: `sudo ./install_ubuntu.sh`. You also need to install the `libnvidia-compute` version that fits your nvidia driver to install libcuda.so to run gpu-screen-recorder and `libnvidia-fbc.so.1` when using nvfbc. But it's recommended that you use the flatpak version of gpu-screen-recorder if you use an older version of ubuntu as the ffmpeg version will be old and wont support the best quality options.\
 If you are running another distro then you can run `install.sh` as root: `sudo ./install.sh`, but you need to manually install the dependencies, as described below.\
 You can also install gpu screen recorder ([the gtk gui version](https://git.dec05eba.com/gpu-screen-recorder-gtk/)) from [flathub](https://flathub.org/apps/details/com.dec05eba.gpu_screen_recorder).
 
 # Dependencies
-`libgl (libglvnd), ffmpeg, libx11, libxcomposite, libxrandr, libpulse`. You need to additionally have `libcuda.so` installed when you run `gpu-screen-recorder`.\
+`libgl (and libegl) (libglvnd), ffmpeg, libx11, libxcomposite, libpulse`. You need to additionally have `libcuda.so` installed when you run `gpu-screen-recorder` and `libnvidia-fbc.so.1` when using nvfbc.\
 Recording monitors requires a gpu with NvFBC support (note: this is not required when recording a single window!). Normally only tesla and quadro gpus support this, but by using [nvidia-patch](https://github.com/keylase/nvidia-patch) or [nvlax](https://github.com/illnyang/nvlax) you can do this on all gpus that support nvenc as well (gpus as old as the nvidia 600 series), provided you are not using outdated gpu drivers.
 
 # How to use

@@ -55,8 +54,6 @@ The plugin does everything on the GPU and gives the texture to OBS, but OBS does
 FFMPEG only uses the GPU with CUDA when doing transcoding from an input video to an output video, and not when recording the screen when using x11grab. So FFMPEG has the same fps drop issues that OBS has.
 
 # TODO
-* Support AMD and Intel, using VAAPI.
-libraries at compile-time.
 * Dynamically change bitrate/resolution to match desired fps. This would be helpful when streaming for example, where the encode output speed also depends on upload speed to the streaming service.
 * Show cursor when recording. Currently the cursor is not visible when recording a window.
 * Implement opengl injection to capture texture. This fixes composition issues and (VRR) without having to use NvFBC direct capture.

TODO (4 changed lines)

@@ -1,7 +1,6 @@
 Check for reparent.
 Only add window to list if its the window is a topmost window.
 Track window damages and only update then. That is better for output file size.
-Getting the texture of a window when using a compositor is an nvidia specific limitation. When gpu-screen-recorder supports other gpus then this can be ignored.
 Quickly changing workspace and back while recording under i3 breaks the screen recorder. i3 probably unmaps windows in other workspaces.
 See https://trac.ffmpeg.org/wiki/EncodingForStreamingSites for optimizing streaming.
 Add option to merge audio tracks into one (muxing?) by adding multiple audio streams in one -a arg separated by comma.

@@ -13,5 +12,6 @@ Allow recording a region by recording the compositor proxy window / nvfbc window
 Resizing the target window to be smaller than the initial size is buggy. The window texture ends up duplicated in the video.
 Handle frames (especially for applications with rounded client-side decorations, such as gnome applications. They are huge).
 Use nvenc directly, which allows removing the use of cuda.
-Fallback to nvfbc and window tracking if window capture fails.
 Handle xrandr monitor change in nvfbc.
+Add option to track the focused window. In that case the video size should dynamically change (change frame resolution) to match the window size and it should update when the window resizes.
+Add option for 4:4:4 chroma sampling for the output video.

build.sh (8 changed lines)

@@ -1,16 +1,18 @@
 #!/bin/sh -e
 
+#libdrm
 dependencies="libavcodec libavformat libavutil x11 xcomposite xrandr libpulse libswresample"
 includes="$(pkg-config --cflags $dependencies)"
 libs="$(pkg-config --libs $dependencies) -ldl -pthread -lm"
 gcc -c src/capture/capture.c -O2 -g0 -DNDEBUG $includes
 gcc -c src/capture/nvfbc.c -O2 -g0 -DNDEBUG $includes
-gcc -c src/capture/xcomposite.c -O2 -g0 -DNDEBUG $includes
-gcc -c src/gl.c -O2 -g0 -DNDEBUG $includes
+gcc -c src/capture/xcomposite_cuda.c -O2 -g0 -DNDEBUG $includes
+gcc -c src/capture/xcomposite_drm.c -O2 -g0 -DNDEBUG $includes
+gcc -c src/egl.c -O2 -g0 -DNDEBUG $includes
 gcc -c src/cuda.c -O2 -g0 -DNDEBUG $includes
 gcc -c src/window_texture.c -O2 -g0 -DNDEBUG $includes
 gcc -c src/time.c -O2 -g0 -DNDEBUG $includes
 g++ -c src/sound.cpp -O2 -g0 -DNDEBUG $includes
 g++ -c src/main.cpp -O2 -g0 -DNDEBUG $includes
-g++ -o gpu-screen-recorder -O2 capture.o nvfbc.o gl.o cuda.o window_texture.o time.o xcomposite.o sound.o main.o -s $libs
+g++ -o gpu-screen-recorder -O2 capture.o nvfbc.o egl.o cuda.o window_texture.o time.o xcomposite_cuda.o xcomposite_drm.o sound.o main.o -s $libs
 echo "Successfully built gpu-screen-recorder"

@@ -1,16 +0,0 @@
-#ifndef GSR_CAPTURE_XCOMPOSITE_H
-#define GSR_CAPTURE_XCOMPOSITE_H
-
-#include "capture.h"
-#include "../vec2.h"
-#include <X11/X.h>
-
-typedef struct _XDisplay Display;
-
-typedef struct {
-    Window window;
-} gsr_capture_xcomposite_params;
-
-gsr_capture* gsr_capture_xcomposite_create(const gsr_capture_xcomposite_params *params);
-
-#endif /* GSR_CAPTURE_XCOMPOSITE_H */

include/capture/xcomposite_cuda.h (Normal file, 16 changed lines)

@@ -0,0 +1,16 @@
+#ifndef GSR_CAPTURE_XCOMPOSITE_CUDA_H
+#define GSR_CAPTURE_XCOMPOSITE_CUDA_H
+
+#include "capture.h"
+#include "../vec2.h"
+#include <X11/X.h>
+
+typedef struct _XDisplay Display;
+
+typedef struct {
+    Window window;
+} gsr_capture_xcomposite_cuda_params;
+
+gsr_capture* gsr_capture_xcomposite_cuda_create(const gsr_capture_xcomposite_cuda_params *params);
+
+#endif /* GSR_CAPTURE_XCOMPOSITE_CUDA_H */

include/capture/xcomposite_drm.h (Normal file, 16 changed lines)

@@ -0,0 +1,16 @@
+#ifndef GSR_CAPTURE_XCOMPOSITE_DRM_H
+#define GSR_CAPTURE_XCOMPOSITE_DRM_H
+
+#include "capture.h"
+#include "../vec2.h"
+#include <X11/X.h>
+
+typedef struct _XDisplay Display;
+
+typedef struct {
+    Window window;
+} gsr_capture_xcomposite_drm_params;
+
+gsr_capture* gsr_capture_xcomposite_drm_create(const gsr_capture_xcomposite_drm_params *params);
+
+#endif /* GSR_CAPTURE_XCOMPOSITE_DRM_H */

include/egl.h (Normal file, 170 changed lines)

@@ -0,0 +1,170 @@
+#ifndef GSR_EGL_H
+#define GSR_EGL_H
+
+/* OpenGL EGL library with a hidden window context (to allow using the opengl functions) */
+
+#include <X11/X.h>
+#include <X11/Xutil.h>
+#include <stdbool.h>
+#include <stdint.h>
+
+#ifdef _WIN64
+typedef signed long long int khronos_intptr_t;
+typedef unsigned long long int khronos_uintptr_t;
+typedef signed long long int khronos_ssize_t;
+typedef unsigned long long int khronos_usize_t;
+#else
+typedef signed long int khronos_intptr_t;
+typedef unsigned long int khronos_uintptr_t;
+typedef signed long int khronos_ssize_t;
+typedef unsigned long int khronos_usize_t;
+#endif
+
+typedef void* EGLDisplay;
+typedef void* EGLNativeDisplayType;
+typedef uintptr_t EGLNativeWindowType;
+typedef uintptr_t EGLNativePixmapType;
+typedef void* EGLConfig;
+typedef void* EGLSurface;
+typedef void* EGLContext;
+typedef void* EGLClientBuffer;
+typedef void* EGLImage;
+typedef void* EGLImageKHR;
+typedef void *GLeglImageOES;
+typedef void (*__eglMustCastToProperFunctionPointerType)(void);
+
+#define EGL_BUFFER_SIZE 0x3020
+#define EGL_RENDERABLE_TYPE 0x3040
+#define EGL_OPENGL_ES2_BIT 0x0004
+#define EGL_NONE 0x3038
+#define EGL_CONTEXT_CLIENT_VERSION 0x3098
+#define EGL_BACK_BUFFER 0x3084
+
+#define GL_TEXTURE_2D 0x0DE1
+#define GL_RGB 0x1907
+#define GL_UNSIGNED_BYTE 0x1401
+#define GL_COLOR_BUFFER_BIT 0x00004000
+#define GL_TEXTURE_WRAP_S 0x2802
+#define GL_TEXTURE_WRAP_T 0x2803
+#define GL_TEXTURE_MAG_FILTER 0x2800
+#define GL_TEXTURE_MIN_FILTER 0x2801
+#define GL_TEXTURE_WIDTH 0x1000
+#define GL_TEXTURE_HEIGHT 0x1001
+#define GL_NEAREST 0x2600
+#define GL_CLAMP_TO_EDGE 0x812F
+#define GL_LINEAR 0x2601
+#define GL_FRAMEBUFFER 0x8D40
+#define GL_COLOR_ATTACHMENT0 0x8CE0
+#define GL_FRAMEBUFFER_COMPLETE 0x8CD5
+#define GL_STATIC_DRAW 0x88E4
+#define GL_ARRAY_BUFFER 0x8892
+
+#define GL_VENDOR 0x1F00
+#define GL_RENDERER 0x1F01
+
+#define GLX_BUFFER_SIZE 2
+#define GLX_DOUBLEBUFFER 5
+#define GLX_RED_SIZE 8
+#define GLX_GREEN_SIZE 9
+#define GLX_BLUE_SIZE 10
+#define GLX_ALPHA_SIZE 11
+#define GLX_DEPTH_SIZE 12
+
+#define GLX_RGBA_BIT 0x00000001
+#define GLX_RENDER_TYPE 0x8011
+#define GLX_FRONT_EXT 0x20DE
+#define GLX_BIND_TO_TEXTURE_RGB_EXT 0x20D0
+#define GLX_DRAWABLE_TYPE 0x8010
+#define GLX_WINDOW_BIT 0x00000001
+#define GLX_PIXMAP_BIT 0x00000002
+#define GLX_BIND_TO_TEXTURE_TARGETS_EXT 0x20D3
+#define GLX_TEXTURE_2D_BIT_EXT 0x00000002
+#define GLX_TEXTURE_TARGET_EXT 0x20D6
+#define GLX_TEXTURE_2D_EXT 0x20DC
+#define GLX_TEXTURE_FORMAT_EXT 0x20D5
+#define GLX_TEXTURE_FORMAT_RGB_EXT 0x20D9
+#define GLX_CONTEXT_FORWARD_COMPATIBLE_BIT_ARB 0x00000002
+#define GLX_CONTEXT_MAJOR_VERSION_ARB 0x2091
+#define GLX_CONTEXT_MINOR_VERSION_ARB 0x2092
+#define GLX_CONTEXT_FLAGS_ARB 0x2094
+
+typedef struct {
+    void *egl_library;
+    void *gl_library;
+    Display *dpy;
+    EGLDisplay egl_display;
+    EGLSurface egl_surface;
+    EGLContext egl_context;
+    Window window;
+
+    EGLDisplay (*eglGetDisplay)(EGLNativeDisplayType display_id);
+    unsigned int (*eglInitialize)(EGLDisplay dpy, int32_t *major, int32_t *minor);
+    unsigned int (*eglChooseConfig)(EGLDisplay dpy, const int32_t *attrib_list, EGLConfig *configs, int32_t config_size, int32_t *num_config);
+    EGLSurface (*eglCreateWindowSurface)(EGLDisplay dpy, EGLConfig config, EGLNativeWindowType win, const int32_t *attrib_list);
+    EGLContext (*eglCreateContext)(EGLDisplay dpy, EGLConfig config, EGLContext share_context, const int32_t *attrib_list);
+    unsigned int (*eglMakeCurrent)(EGLDisplay dpy, EGLSurface draw, EGLSurface read, EGLContext ctx);
+    EGLSurface (*eglCreatePixmapSurface)(EGLDisplay dpy, EGLConfig config, EGLNativePixmapType pixmap, const int32_t *attrib_list);
+    EGLImage (*eglCreateImage)(EGLDisplay dpy, EGLContext ctx, unsigned int target, EGLClientBuffer buffer, const intptr_t *attrib_list);
+    unsigned int (*eglBindTexImage)(EGLDisplay dpy, EGLSurface surface, int32_t buffer);
+    unsigned int (*eglSwapInterval)(EGLDisplay dpy, int32_t interval);
+    unsigned int (*eglSwapBuffers)(EGLDisplay dpy, EGLSurface surface);
+    __eglMustCastToProperFunctionPointerType (*eglGetProcAddress)(const char *procname);
+
+    unsigned int (*eglExportDMABUFImageQueryMESA)(EGLDisplay dpy, EGLImageKHR image, int *fourcc, int *num_planes, uint64_t *modifiers);
+    unsigned int (*eglExportDMABUFImageMESA)(EGLDisplay dpy, EGLImageKHR image, int *fds, int32_t *strides, int32_t *offsets);
+    void (*glEGLImageTargetTexture2DOES)(unsigned int target, GLeglImageOES image);
+
+    unsigned int (*glGetError)(void);
+    const unsigned char* (*glGetString)(unsigned int name);
+    void (*glClear)(unsigned int mask);
+    void (*glClearColor)(float red, float green, float blue, float alpha);
+    void (*glGenTextures)(int n, unsigned int *textures);
+    void (*glDeleteTextures)(int n, const unsigned int *texture);
+    void (*glBindTexture)(unsigned int target, unsigned int texture);
+    void (*glTexParameteri)(unsigned int target, unsigned int pname, int param);
+    void (*glGetTexLevelParameteriv)(unsigned int target, int level, unsigned int pname, int *params);
+    void (*glTexImage2D)(unsigned int target, int level, int internalFormat, int width, int height, int border, unsigned int format, unsigned int type, const void *pixels);
+    void (*glCopyImageSubData)(unsigned int srcName, unsigned int srcTarget, int srcLevel, int srcX, int srcY, int srcZ, unsigned int dstName, unsigned int dstTarget, int dstLevel, int dstX, int dstY, int dstZ, int srcWidth, int srcHeight, int srcDepth);
+    void (*glGenFramebuffers)(int n, unsigned int *framebuffers);
+    void (*glBindFramebuffer)(unsigned int target, unsigned int framebuffer);
+    void (*glViewport)(int x, int y, int width, int height);
+    void (*glFramebufferTexture2D)(unsigned int target, unsigned int attachment, unsigned int textarget, unsigned int texture, int level);
+    void (*glDrawBuffers)(int n, const unsigned int *bufs);
+    unsigned int (*glCheckFramebufferStatus)(unsigned int target);
+    void (*glBindBuffer)(unsigned int target, unsigned int buffer);
+    void (*glGenBuffers)(int n, unsigned int *buffers);
+    void (*glBufferData)(unsigned int target, khronos_ssize_t size, const void *data, unsigned int usage);
+    int (*glGetUniformLocation)(unsigned int program, const char *name);
+    void (*glGenVertexArrays)(int n, unsigned int *arrays);
+    void (*glBindVertexArray)(unsigned int array);
+
+    unsigned int (*glCreateProgram)(void);
+    unsigned int (*glCreateShader)(unsigned int type);
+    void (*glAttachShader)(unsigned int program, unsigned int shader);
+    void (*glBindAttribLocation)(unsigned int program, unsigned int index, const char *name);
+    void (*glCompileShader)(unsigned int shader);
+    void (*glLinkProgram)(unsigned int program);
+    void (*glShaderSource)(unsigned int shader, int count, const char *const*string, const int *length);
+    void (*glUseProgram)(unsigned int program);
+    void (*glGetProgramInfoLog)(unsigned int program, int bufSize, int *length, char *infoLog);
+    void (*glGetShaderiv)(unsigned int shader, unsigned int pname, int *params);
+    void (*glGetShaderInfoLog)(unsigned int shader, int bufSize, int *length, char *infoLog);
+    void (*glGetShaderSource)(unsigned int shader, int bufSize, int *length, char *source);
+    void (*glDeleteProgram)(unsigned int program);
+    void (*glDeleteShader)(unsigned int shader);
+    void (*glGetProgramiv)(unsigned int program, unsigned int pname, int *params);
+    void (*glVertexAttribPointer)(unsigned int index, int size, unsigned int type, unsigned char normalized, int stride, const void *pointer);
+    void (*glEnableVertexAttribArray)(unsigned int index);
+    void (*glDrawArrays)(unsigned int mode, int first, int count );
+    void (*glReadBuffer)( unsigned int mode );
+    void (*glReadPixels)(int x, int y,
+        int width, int height,
+        unsigned int format, unsigned int type,
+        void *pixels );
+} gsr_egl;
+
+bool gsr_egl_load(gsr_egl *self, Display *dpy);
+bool gsr_egl_make_context_current(gsr_egl *self);
+void gsr_egl_unload(gsr_egl *self);
+
+#endif /* GSR_EGL_H */
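The `#ifdef _WIN64` split in egl.h follows the usual data-model difference: 64-bit Windows is LLP64, where `long` stays 32 bits while pointers are 64 bits, so `long long` is needed there, whereas 64-bit Linux is LP64, where `long` already matches the pointer size. These types matter because `glBufferData` takes a `khronos_ssize_t` size. The compile-time checks below are an illustrative addition, not part of the commit; they simply restate that assumption for whatever target you build on.

```c
#include <assert.h> /* provides the C11 static_assert macro */
#include <stdint.h>

/* Same pointer-sized typedefs as in include/egl.h. */
#ifdef _WIN64
typedef signed long long int khronos_intptr_t;
typedef unsigned long long int khronos_uintptr_t;
typedef signed long long int khronos_ssize_t;
#else
typedef signed long int khronos_intptr_t;
typedef unsigned long int khronos_uintptr_t;
typedef signed long int khronos_ssize_t;
#endif

/* Compilation fails if the chosen integer types are not pointer-sized
   on the current data model (LP64, LLP64 or ILP32). */
static_assert(sizeof(khronos_intptr_t) == sizeof(void *), "khronos_intptr_t must be pointer-sized");
static_assert(sizeof(khronos_uintptr_t) == sizeof(uintptr_t), "khronos_uintptr_t must match uintptr_t");
static_assert(sizeof(khronos_ssize_t) == sizeof(void *), "khronos_ssize_t must be pointer-sized");
```

On 32-bit Linux (ILP32) both `long` and pointers are 32 bits, so the `#else` branch holds there as well.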

include/gl.h (102 changed lines)

@@ -1,102 +0,0 @@
-#ifndef GSR_GL_H
-#define GSR_GL_H
-
-/* OpenGL library with a hidden window context (to allow using the opengl functions) */
-
-#include <X11/X.h>
-#include <X11/Xutil.h>
-#include <stdbool.h>
-
-typedef XID GLXPixmap;
-typedef XID GLXDrawable;
-typedef XID GLXWindow;
-
-typedef struct __GLXcontextRec *GLXContext;
-typedef struct __GLXFBConfigRec *GLXFBConfig;
-
-#define GL_TEXTURE_2D 0x0DE1
-#define GL_RGB 0x1907
-#define GL_UNSIGNED_BYTE 0x1401
-#define GL_COLOR_BUFFER_BIT 0x00004000
-#define GL_TEXTURE_WRAP_S 0x2802
-#define GL_TEXTURE_WRAP_T 0x2803
-#define GL_TEXTURE_MAG_FILTER 0x2800
-#define GL_TEXTURE_MIN_FILTER 0x2801
-#define GL_TEXTURE_WIDTH 0x1000
-#define GL_TEXTURE_HEIGHT 0x1001
-#define GL_NEAREST 0x2600
-#define GL_CLAMP_TO_EDGE 0x812F
-#define GL_LINEAR 0x2601
-
-#define GL_RENDERER 0x1F01
-
-#define GLX_BUFFER_SIZE 2
-#define GLX_DOUBLEBUFFER 5
-#define GLX_RED_SIZE 8
-#define GLX_GREEN_SIZE 9
-#define GLX_BLUE_SIZE 10
-#define GLX_ALPHA_SIZE 11
-#define GLX_DEPTH_SIZE 12
-
-#define GLX_RGBA_BIT 0x00000001
-#define GLX_RENDER_TYPE 0x8011
-#define GLX_FRONT_EXT 0x20DE
-#define GLX_BIND_TO_TEXTURE_RGB_EXT 0x20D0
-#define GLX_DRAWABLE_TYPE 0x8010
-#define GLX_WINDOW_BIT 0x00000001
-#define GLX_PIXMAP_BIT 0x00000002
-#define GLX_BIND_TO_TEXTURE_TARGETS_EXT 0x20D3
-#define GLX_TEXTURE_2D_BIT_EXT 0x00000002
-#define GLX_TEXTURE_TARGET_EXT 0x20D6
-#define GLX_TEXTURE_2D_EXT 0x20DC
-#define GLX_TEXTURE_FORMAT_EXT 0x20D5
-#define GLX_TEXTURE_FORMAT_RGB_EXT 0x20D9
-#define GLX_CONTEXT_FORWARD_COMPATIBLE_BIT_ARB 0x00000002
-#define GLX_CONTEXT_MAJOR_VERSION_ARB 0x2091
-#define GLX_CONTEXT_MINOR_VERSION_ARB 0x2092
-#define GLX_CONTEXT_FLAGS_ARB 0x2094
-
-typedef struct {
-    void *library;
-    Display *dpy;
-    GLXFBConfig *fbconfigs;
-    XVisualInfo *visual_info;
-    GLXFBConfig fbconfig;
-    Colormap colormap;
-    GLXContext gl_context;
-    Window window;
-
-    GLXPixmap (*glXCreatePixmap)(Display *dpy, GLXFBConfig config, Pixmap pixmap, const int *attribList);
-    void (*glXDestroyPixmap)(Display *dpy, GLXPixmap pixmap);
-    void (*glXBindTexImageEXT)(Display *dpy, GLXDrawable drawable, int buffer, const int *attrib_list);
-    void (*glXReleaseTexImageEXT)(Display *dpy, GLXDrawable drawable, int buffer);
-    GLXFBConfig* (*glXChooseFBConfig)(Display *dpy, int screen, const int *attribList, int *nitems);
-    XVisualInfo* (*glXGetVisualFromFBConfig)(Display *dpy, GLXFBConfig config);
-    GLXContext (*glXCreateContextAttribsARB)(Display *dpy, GLXFBConfig config, GLXContext share_context, Bool direct, const int *attrib_list);
-    Bool (*glXMakeContextCurrent)(Display *dpy, GLXDrawable draw, GLXDrawable read, GLXContext ctx);
-    void (*glXDestroyContext)(Display *dpy, GLXContext ctx);
-    void (*glXSwapBuffers)(Display *dpy, GLXDrawable drawable);
-
-    void (*glXSwapIntervalEXT)(Display *dpy, GLXDrawable drawable, int interval);
-    int (*glXSwapIntervalMESA)(unsigned int interval);
-    int (*glXSwapIntervalSGI)(int interval);
-
-    void (*glClearTexImage)(unsigned int texture, unsigned int level, unsigned int format, unsigned int type, const void *data);
-
-    unsigned int (*glGetError)(void);
-    const unsigned char* (*glGetString)(unsigned int name);
-    void (*glClear)(unsigned int mask);
-    void (*glGenTextures)(int n, unsigned int *textures);
-    void (*glDeleteTextures)(int n, const unsigned int *texture);
-    void (*glBindTexture)(unsigned int target, unsigned int texture);
-    void (*glTexParameteri)(unsigned int target, unsigned int pname, int param);
-    void (*glGetTexLevelParameteriv)(unsigned int target, int level, unsigned int pname, int *params);
-    void (*glTexImage2D)(unsigned int target, int level, int internalFormat, int width, int height, int border, unsigned int format, unsigned int type, const void *pixels);
-    void (*glCopyImageSubData)(unsigned int srcName, unsigned int srcTarget, int srcLevel, int srcX, int srcY, int srcZ, unsigned int dstName, unsigned int dstTarget, int dstLevel, int dstX, int dstY, int dstZ, int srcWidth, int srcHeight, int srcDepth);
-} gsr_gl;
-
-bool gsr_gl_load(gsr_gl *self, Display *dpy);
-bool gsr_gl_make_context_current(gsr_gl *self);
-void gsr_gl_unload(gsr_gl *self);
-
-#endif /* GSR_GL_H */
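Both the removed gsr_gl (`void *library`) and the new gsr_egl (`void *egl_library`, `void *gl_library`) keep a library handle next to a table of function pointers, and build.sh links with `-ldl`, which suggests the pointers are resolved at runtime with `dlopen`/`dlsym`. src/egl.c is not part of this section, so the following is only a sketch of that general pattern under that assumption; `dlsym_assign` and `dlsym_load_list` are hypothetical names, not the project's API.

```c
#include <assert.h>
#include <dlfcn.h>
#include <stdbool.h>
#include <stdio.h>

/* Hypothetical table entry: where to store a resolved symbol, and its exported name. */
typedef struct {
    void **func;
    const char *name;
} dlsym_assign;

/* Resolves every entry of a table terminated by { NULL, NULL } from a handle
   returned by dlopen(). Stops and returns false at the first symbol that
   cannot be found, printing the dlerror() message if there is one. */
static bool dlsym_load_list(void *handle, const dlsym_assign *assign) {
    assert(handle != NULL);
    for(int i = 0; assign[i].func; ++i) {
        dlerror(); /* clear any earlier error state */
        *assign[i].func = dlsym(handle, assign[i].name);
        const char *err = dlerror();
        if(err || !*assign[i].func) {
            fprintf(stderr, "failed to load symbol %s: %s\n", assign[i].name, err ? err : "null address");
            return false;
        }
    }
    return true;
}
```

Applied to gsr_egl, an entry would look like `{ (void**)&self->eglGetDisplay, "eglGetDisplay" }`, with the handle coming from something like `dlopen("libEGL.so.1", RTLD_LAZY)` (the soname is an assumption). Storing through a `void **` that aliases a function-pointer field is not strictly ISO C, but it is the common idiom that POSIX `dlsym` users rely on. On glibc older than 2.34 this needs `-ldl`, which build.sh already passes.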

@@ -1,20 +1,22 @@
 #ifndef WINDOW_TEXTURE_H
 #define WINDOW_TEXTURE_H
 
-#include "gl.h"
+#include "egl.h"
 
 typedef struct {
     Display *display;
     Window window;
     Pixmap pixmap;
-    GLXPixmap glx_pixmap;
     unsigned int texture_id;
+    unsigned int target_texture_id;
+    int texture_width;
+    int texture_height;
     int redirected;
-    gsr_gl *gl;
+    gsr_egl *egl;
 } WindowTexture;
 
 /* Returns 0 on success */
-int window_texture_init(WindowTexture *window_texture, Display *display, Window window, gsr_gl *gl);
+int window_texture_init(WindowTexture *window_texture, Display *display, Window window, gsr_egl *egl);
 void window_texture_deinit(WindowTexture *self);
 
 /*

@@ -13,3 +13,4 @@ xcomposite = ">=0.2"
 xrandr = ">=1"
 libpulse = ">=13"
 libswresample = ">=3"
+#libdrm = ">=2"

@@ -22,6 +22,7 @@ typedef struct {
     bool fbc_handle_created;
 
     gsr_cuda cuda;
+    bool frame_initialized;
 } gsr_capture_nvfbc;
 
 #if defined(_WIN64) || defined(__LP64__)
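The next hunk replaces driver_supports_direct_capture_cursor(), which hard-coded the 515.57 threshold for direct-capture cursor support, with get_driver_version() plus a separate version_at_least() comparison, presumably so the threshold check can be reused. A self-contained sketch of the same parsing and comparison, run on an in-memory string instead of /proc/driver/nvidia/version; the helper name parse_driver_version and the sample text are illustrative assumptions, and `strlen(marker)` stands in for the hard-coded 13.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdio.h>
#include <string.h>

/* Same comparison as version_at_least() in nvfbc.c. */
static bool version_at_least(int major, int minor, int expected_major, int expected_minor) {
    return major > expected_major || (major == expected_major && minor >= expected_minor);
}

/* Hypothetical helper: extracts "<major>.<minor>" following "Kernel Module"
   in text shaped like /proc/driver/nvidia/version. Outputs are zeroed first
   and only overwritten on success, mirroring get_driver_version(). */
static bool parse_driver_version(const char *text, int *major, int *minor) {
    static const char marker[] = "Kernel Module";
    assert(text && major && minor);
    *major = 0;
    *minor = 0;
    const char *p = strstr(text, marker);
    if(!p)
        return false;
    p += strlen(marker); /* 13, the constant hard-coded in nvfbc.c */
    int major_v = 0, minor_v = 0;
    if(sscanf(p, "%d.%d", &major_v, &minor_v) != 2)
        return false;
    *major = major_v;
    *minor = minor_v;
    return true;
}
```

For a line such as `NVRM version: NVIDIA UNIX x86_64 Kernel Module  515.57  ...`, parse_driver_version() yields 515 and 57, and `version_at_least(515, 57, 515, 57)` is true, which matches the old cursor check.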

@@ -52,28 +53,45 @@ static uint32_t get_output_id_from_display_name(NVFBC_RANDR_OUTPUT_INFO *outputs
 }
 
 /* TODO: Test with optimus and open kernel modules */
-static bool driver_supports_direct_capture_cursor() {
+static bool get_driver_version(int *major, int *minor) {
+    *major = 0;
+    *minor = 0;
+
     FILE *f = fopen("/proc/driver/nvidia/version", "rb");
-    if(!f)
+    if(!f) {
+        fprintf(stderr, "gsr warning: failed to get nvidia driver version (failed to read /proc/driver/nvidia/version)\n");
         return false;
+    }
 
     char buffer[2048];
     size_t bytes_read = fread(buffer, 1, sizeof(buffer) - 1, f);
     buffer[bytes_read] = '\0';
 
-    bool supports_cursor = false;
+    bool success = false;
     const char *p = strstr(buffer, "Kernel Module");
     if(p) {
         p += 13;
         int driver_major_version = 0, driver_minor_version = 0;
         if(sscanf(p, "%d.%d", &driver_major_version, &driver_minor_version) == 2) {
-            if(driver_major_version > 515 || (driver_major_version == 515 && driver_minor_version >= 57))
-                supports_cursor = true;
+            *major = driver_major_version;
+            *minor = driver_minor_version;
+            success = true;
         }
     }
 
+    if(!success)
+        fprintf(stderr, "gsr warning: failed to get nvidia driver version\n");
+
     fclose(f);
-    return supports_cursor;
+    return success;
+}
+
+static bool version_at_least(int major, int minor, int expected_major, int expected_minor) {
+    return major > expected_major || (major == expected_major && minor >= expected_minor);

|

||||||

|

}

|

||||||

|

|

||||||

|

static bool version_less_than(int major, int minor, int expected_major, int expected_minor) {

|

||||||

|

return major < expected_major || (major == expected_major && minor < expected_minor);

|

||||||

}

|

}

|

||||||

|

|

||||||

static bool gsr_capture_nvfbc_load_library(gsr_capture *cap) {

|

static bool gsr_capture_nvfbc_load_library(gsr_capture *cap) {

|
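
get_driver_version() finds "Kernel Module" in /proc/driver/nvidia/version, skips those 13 characters and reads the next two dot-separated integers with sscanf. The sketch below applies the same steps to an in-memory string so the parsing can be checked without an NVIDIA driver installed. The function name parse_nvidia_version is hypothetical, and the sample line in the usage note only assumes the layout the code above already relies on (the version number follows "Kernel Module").

```c
#include <stdbool.h>
#include <stdio.h>
#include <string.h>

/* Hypothetical helper: the parsing steps of get_driver_version(),
 * applied to a buffer instead of /proc/driver/nvidia/version. */
static bool parse_nvidia_version(const char *text, int *major, int *minor) {
    *major = 0;
    *minor = 0;

    const char *p = strstr(text, "Kernel Module");
    if(!p)
        return false;

    /* strlen("Kernel Module") == 13, the same skip as "p += 13" */
    p += strlen("Kernel Module");

    int maj = 0, min = 0;
    /* %d skips leading whitespace; anything after "major.minor" is ignored */
    if(sscanf(p, "%d.%d", &maj, &min) != 2)
        return false;

    *major = maj;
    *minor = min;
    return true;
}
```

For example, parsing the illustrative line `NVRM version: NVIDIA UNIX x86_64 Kernel Module  515.65.01  Wed Jul 20 ...` yields major 515 and minor 65. The third component (.01) is never read, so only major.minor takes part in the later version checks.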

@@ -180,6 +198,30 @@ static int gsr_capture_nvfbc_start(gsr_capture *cap, AVCodecContext *video_codec

     const bool capture_region = (x > 0 || y > 0 || width > 0 || height > 0);

+    bool supports_direct_cursor = false;
+    bool direct_capture = cap_nvfbc->params.direct_capture;
+    int driver_major_version = 0;
+    int driver_minor_version = 0;
+    if(direct_capture && get_driver_version(&driver_major_version, &driver_minor_version)) {
+        fprintf(stderr, "Info: detected nvidia version: %d.%d\n", driver_major_version, driver_minor_version);
+
+        if(version_at_least(driver_major_version, driver_minor_version, 515, 57) && version_less_than(driver_major_version, driver_minor_version, 520, 56)) {
+            direct_capture = false;
+            fprintf(stderr, "Warning: \"screen-direct\" has temporary been disabled as it causes stuttering with driver versions >= 515.57 and < 520.56. Please update your driver if possible. Capturing \"screen\" instead.\n");
+        }
+
+        // TODO:
+        // Cursor capture disabled because moving the cursor doesn't update capture rate to monitor hz and instead captures at 10-30 hz
+        /*
+        if(direct_capture) {
+            if(version_at_least(driver_major_version, driver_minor_version, 515, 57))
+                supports_direct_cursor = true;
+            else
+                fprintf(stderr, "Info: capturing \"screen-direct\" but driver version appears to be less than 515.57. Disabling capture of cursor. Please update your driver if you want to capture your cursor or record \"screen\" instead.\n");
+        }
+        */
+    }
+
     NVFBCSTATUS status;
     NVFBC_TRACKING_TYPE tracking_type;
     bool capture_session_created = false;
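
The new start code turns "screen-direct" off when the detected driver version lies in the half-open range [515.57, 520.56). The sketch below isolates that decision as a pure function built from copies of the two comparison helpers, so the boundary cases can be checked directly. direct_capture_allowed is a hypothetical name, not part of the commit.

```c
#include <stdbool.h>

/* Copies of the comparison helpers added in this commit. */
static bool version_at_least(int major, int minor, int expected_major, int expected_minor) {
    return major > expected_major || (major == expected_major && minor >= expected_minor);
}

static bool version_less_than(int major, int minor, int expected_major, int expected_minor) {
    return major < expected_major || (major == expected_major && minor < expected_minor);
}

/* Hypothetical wrapper: false for drivers in [515.57, 520.56),
 * where the commit falls back from "screen-direct" to "screen". */
static bool direct_capture_allowed(int major, int minor) {
    const bool in_stutter_range = version_at_least(major, minor, 515, 57)
        && version_less_than(major, minor, 520, 56);
    return !in_stutter_range;
}
```

In the commit itself the check only runs when get_driver_version() succeeds; if the version cannot be read, direct capture stays enabled. Note also that the local direct_capture flag only feeds bWithCursor in the next hunk, while bAllowDirectCapture and bPushModel there still read cap_nvfbc->params.direct_capture.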

@@ -245,11 +287,11 @@ static int gsr_capture_nvfbc_start(gsr_capture *cap, AVCodecContext *video_codec
     memset(&create_capture_params, 0, sizeof(create_capture_params));
     create_capture_params.dwVersion = NVFBC_CREATE_CAPTURE_SESSION_PARAMS_VER;
     create_capture_params.eCaptureType = NVFBC_CAPTURE_SHARED_CUDA;
-    create_capture_params.bWithCursor = (!cap_nvfbc->params.direct_capture || driver_supports_direct_capture_cursor()) ? NVFBC_TRUE : NVFBC_FALSE;
+    create_capture_params.bWithCursor = (!direct_capture || supports_direct_cursor) ? NVFBC_TRUE : NVFBC_FALSE;
     if(capture_region)
         create_capture_params.captureBox = (NVFBC_BOX){ x, y, width, height };
     create_capture_params.eTrackingType = tracking_type;
-    create_capture_params.dwSamplingRateMs = 1000u / (uint32_t)cap_nvfbc->params.fps;
+    create_capture_params.dwSamplingRateMs = 1000u / ((uint32_t)cap_nvfbc->params.fps + 1);
     create_capture_params.bAllowDirectCapture = cap_nvfbc->params.direct_capture ? NVFBC_TRUE : NVFBC_FALSE;
     create_capture_params.bPushModel = cap_nvfbc->params.direct_capture ? NVFBC_TRUE : NVFBC_FALSE;
     if(tracking_type == NVFBC_TRACKING_OUTPUT)
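
The dwSamplingRateMs expression changes from 1000/fps to 1000/(fps + 1), both with unsigned integer division. The sketch below reproduces the two expressions side by side; the function names are hypothetical. The commit does not state the motivation, but the arithmetic shows that the new interval is never longer than the old one, is sometimes identical because of truncation, and avoids the old division by zero when fps is 0.

```c
#include <stdint.h>

/* Hypothetical helper: the old dwSamplingRateMs expression. */
static uint32_t sampling_rate_ms_old(int fps) {
    return 1000u / (uint32_t)fps; /* divides by zero if fps == 0 */
}

/* Hypothetical helper: the new dwSamplingRateMs expression. */
static uint32_t sampling_rate_ms_new(int fps) {
    return 1000u / ((uint32_t)fps + 1);
}
```

At 60 fps both give 16 ms (1000/60 and 1000/61 both truncate to 16); at 30 fps the interval drops from 33 ms to 32 ms, and at 10 fps from 100 ms to 90 ms.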

@@ -324,6 +366,16 @@ static void gsr_capture_nvfbc_destroy_session(gsr_capture *cap) {
     cap_nvfbc->nv_fbc_handle = 0;
 }

+static void gsr_capture_nvfbc_tick(gsr_capture *cap, AVCodecContext *video_codec_context, AVFrame **frame) {
+    gsr_capture_nvfbc *cap_nvfbc = cap->priv;
+    if(!cap_nvfbc->frame_initialized && video_codec_context->hw_frames_ctx) {
+        cap_nvfbc->frame_initialized = true;
+        (*frame)->hw_frames_ctx = video_codec_context->hw_frames_ctx;
+        (*frame)->buf[0] = av_buffer_pool_get(((AVHWFramesContext*)video_codec_context->hw_frames_ctx->data)->pool);
+        (*frame)->extended_data = (*frame)->data;
+    }
+}
+
 static int gsr_capture_nvfbc_capture(gsr_capture *cap, AVFrame *frame) {
     gsr_capture_nvfbc *cap_nvfbc = cap->priv;


@@ -338,6 +390,7 @@ static int gsr_capture_nvfbc_capture(gsr_capture *cap, AVFrame *frame) {
     grab_params.dwFlags = NVFBC_TOCUDA_GRAB_FLAGS_NOWAIT;/* | NVFBC_TOCUDA_GRAB_FLAGS_FORCE_REFRESH;*/
     grab_params.pFrameGrabInfo = &frame_info;
     grab_params.pCUDADeviceBuffer = &cu_device_ptr;
+    grab_params.dwTimeoutMs = 0;

     NVFBCSTATUS status = cap_nvfbc->nv_fbc_function_list.nvFBCToCudaGrabFrame(cap_nvfbc->nv_fbc_handle, &grab_params);
     if(status != NVFBC_SUCCESS) {

@@ -406,7 +459,7 @@ gsr_capture* gsr_capture_nvfbc_create(const gsr_capture_nvfbc_params *params) {

     *cap = (gsr_capture) {
         .start = gsr_capture_nvfbc_start,
-        .tick = NULL,
+        .tick = gsr_capture_nvfbc_tick,
         .should_stop = NULL,
         .capture = gsr_capture_nvfbc_capture,
         .destroy = gsr_capture_nvfbc_destroy,

@@ -1,5 +1,5 @@
-#include "../../include/capture/xcomposite.h"
-#include "../../include/gl.h"
+#include "../../include/capture/xcomposite_cuda.h"
+#include "../../include/egl.h"
 #include "../../include/cuda.h"
 #include "../../include/window_texture.h"
 #include "../../include/time.h"

@@ -9,10 +9,8 @@
 #include <libavutil/frame.h>
 #include <libavcodec/avcodec.h>

-/* TODO: Proper error checks and cleanups */
-
 typedef struct {
-    gsr_capture_xcomposite_params params;
+    gsr_capture_xcomposite_cuda_params params;
     Display *dpy;
     XEvent xev;
     bool should_stop;

@@ -32,9 +30,9 @@ typedef struct {
     CUgraphicsResource cuda_graphics_resource;
     CUarray mapped_array;

-    gsr_gl gl;
+    gsr_egl egl;
     gsr_cuda cuda;
-} gsr_capture_xcomposite;
+} gsr_capture_xcomposite_cuda;

 static int max_int(int a, int b) {
     return a > b ? a : b;

@@ -44,58 +42,9 @@ static int min_int(int a, int b) {
     return a < b ? a : b;
 }

-static void gsr_capture_xcomposite_stop(gsr_capture *cap, AVCodecContext *video_codec_context);
+static void gsr_capture_xcomposite_cuda_stop(gsr_capture *cap, AVCodecContext *video_codec_context);

-static Window get_compositor_window(Display *display) {
-    Window overlay_window = XCompositeGetOverlayWindow(display, DefaultRootWindow(display));
-    XCompositeReleaseOverlayWindow(display, DefaultRootWindow(display));
-
-    Window root_window, parent_window;
-    Window *children = NULL;
-    unsigned int num_children = 0;
-    if(XQueryTree(display, overlay_window, &root_window, &parent_window, &children, &num_children) == 0)
-        return None;
-
-    Window compositor_window = None;
-    if(num_children == 1) {
-        compositor_window = children[0];
-        const int screen_width = XWidthOfScreen(DefaultScreenOfDisplay(display));
-        const int screen_height = XHeightOfScreen(DefaultScreenOfDisplay(display));
-
-        XWindowAttributes attr;
-        if(!XGetWindowAttributes(display, compositor_window, &attr) || attr.width != screen_width || attr.height != screen_height)
-            compositor_window = None;
-    }
-
-    if(children)
-        XFree(children);
-
-    return compositor_window;
-}
-
-/* TODO: check for glx swap control extension string (GLX_EXT_swap_control, etc) */
-static void set_vertical_sync_enabled(Display *display, Window window, gsr_gl *gl, bool enabled) {
-    int result = 0;
-
-    if(gl->glXSwapIntervalEXT) {
-        gl->glXSwapIntervalEXT(display, window, enabled ? 1 : 0);
-    } else if(gl->glXSwapIntervalMESA) {
-        result = gl->glXSwapIntervalMESA(enabled ? 1 : 0);
-    } else if(gl->glXSwapIntervalSGI) {
-        result = gl->glXSwapIntervalSGI(enabled ? 1 : 0);
-    } else {
-        static int warned = 0;
-        if (!warned) {
-            warned = 1;
-            fprintf(stderr, "Warning: setting vertical sync not supported\n");
-        }
-    }
-
-    if(result != 0)
-        fprintf(stderr, "Warning: setting vertical sync failed\n");
-}
-
-static bool cuda_register_opengl_texture(gsr_capture_xcomposite *cap_xcomp) {
+static bool cuda_register_opengl_texture(gsr_capture_xcomposite_cuda *cap_xcomp) {
     CUresult res;
     CUcontext old_ctx;
     res = cap_xcomp->cuda.cuCtxPushCurrent_v2(cap_xcomp->cuda.cu_ctx);

@@ -112,23 +61,22 @@ static bool cuda_register_opengl_texture(gsr_capture_xcomposite *cap_xcomp) {
         return false;
     }

-    /* Get texture */
     res = cap_xcomp->cuda.cuGraphicsResourceSetMapFlags(cap_xcomp->cuda_graphics_resource, CU_GRAPHICS_MAP_RESOURCE_FLAGS_READ_ONLY);
     res = cap_xcomp->cuda.cuGraphicsMapResources(1, &cap_xcomp->cuda_graphics_resource, 0);

-    /* Map texture to cuda array */
     res = cap_xcomp->cuda.cuGraphicsSubResourceGetMappedArray(&cap_xcomp->mapped_array, cap_xcomp->cuda_graphics_resource, 0, 0);
     res = cap_xcomp->cuda.cuCtxPopCurrent_v2(&old_ctx);
     return true;
 }

-static bool cuda_create_codec_context(gsr_capture_xcomposite *cap_xcomp, AVCodecContext *video_codec_context) {
+static bool cuda_create_codec_context(gsr_capture_xcomposite_cuda *cap_xcomp, AVCodecContext *video_codec_context) {
     CUcontext old_ctx;
     cap_xcomp->cuda.cuCtxPushCurrent_v2(cap_xcomp->cuda.cu_ctx);

     AVBufferRef *device_ctx = av_hwdevice_ctx_alloc(AV_HWDEVICE_TYPE_CUDA);
     if(!device_ctx) {
         fprintf(stderr, "Error: Failed to create hardware device context\n");
+        cap_xcomp->cuda.cuCtxPopCurrent_v2(&old_ctx);
         return false;
     }


@@ -173,7 +121,7 @@ static bool cuda_create_codec_context(gsr_capture_xcomposite *cap_xcomp, AVCodec
     return true;
 }

-static unsigned int gl_create_texture(gsr_capture_xcomposite *cap_xcomp, int width, int height) {
+static unsigned int gl_create_texture(gsr_capture_xcomposite_cuda *cap_xcomp, int width, int height) {
     // Generating this second texture is needed because
     // cuGraphicsGLRegisterImage cant be used with the texture that is mapped
     // directly to the pixmap.

@@ -182,25 +130,25 @@ static unsigned int gl_create_texture(gsr_capture_xcomposite *cap_xcomp, int wid
     // then needed every frame.
     // Ignoring failure for now.. TODO: Show proper error
     unsigned int texture_id = 0;
-    cap_xcomp->gl.glGenTextures(1, &texture_id);
-    cap_xcomp->gl.glBindTexture(GL_TEXTURE_2D, texture_id);
-    cap_xcomp->gl.glTexImage2D(GL_TEXTURE_2D, 0, GL_RGB, width, height, 0, GL_RGB, GL_UNSIGNED_BYTE, NULL);
+    cap_xcomp->egl.glGenTextures(1, &texture_id);
+    cap_xcomp->egl.glBindTexture(GL_TEXTURE_2D, texture_id);
+    cap_xcomp->egl.glTexImage2D(GL_TEXTURE_2D, 0, GL_RGB, width, height, 0, GL_RGB, GL_UNSIGNED_BYTE, NULL);

-    cap_xcomp->gl.glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_S, GL_CLAMP_TO_EDGE);
-    cap_xcomp->gl.glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_T, GL_CLAMP_TO_EDGE);
-    cap_xcomp->gl.glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MAG_FILTER, GL_LINEAR);
-    cap_xcomp->gl.glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MIN_FILTER, GL_LINEAR);
+    cap_xcomp->egl.glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_S, GL_CLAMP_TO_EDGE);
+    cap_xcomp->egl.glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_T, GL_CLAMP_TO_EDGE);
+    cap_xcomp->egl.glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MAG_FILTER, GL_LINEAR);
+    cap_xcomp->egl.glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MIN_FILTER, GL_LINEAR);

-    cap_xcomp->gl.glBindTexture(GL_TEXTURE_2D, 0);
+    cap_xcomp->egl.glBindTexture(GL_TEXTURE_2D, 0);
     return texture_id;
 }

-static int gsr_capture_xcomposite_start(gsr_capture *cap, AVCodecContext *video_codec_context) {
-    gsr_capture_xcomposite *cap_xcomp = cap->priv;
+static int gsr_capture_xcomposite_cuda_start(gsr_capture *cap, AVCodecContext *video_codec_context) {
+    gsr_capture_xcomposite_cuda *cap_xcomp = cap->priv;

     XWindowAttributes attr;
     if(!XGetWindowAttributes(cap_xcomp->dpy, cap_xcomp->params.window, &attr)) {
-        fprintf(stderr, "gsr error: gsr_capture_xcomposite_start failed: invalid window id: %lu\n", cap_xcomp->params.window);
+        fprintf(stderr, "gsr error: gsr_capture_xcomposite_cuda_start failed: invalid window id: %lu\n", cap_xcomp->params.window);
         return -1;
     }


@@ -211,32 +159,33 @@ static int gsr_capture_xcomposite_start(gsr_capture *cap, AVCodecContext *video_

     XSelectInput(cap_xcomp->dpy, cap_xcomp->params.window, StructureNotifyMask | ExposureMask);

-    if(!gsr_gl_load(&cap_xcomp->gl, cap_xcomp->dpy)) {
-        fprintf(stderr, "gsr error: gsr_capture_xcomposite_start: failed to load opengl\n");
+    if(!gsr_egl_load(&cap_xcomp->egl, cap_xcomp->dpy)) {
+        fprintf(stderr, "gsr error: gsr_capture_xcomposite_cuda_start: failed to load opengl\n");
         return -1;
     }

-    set_vertical_sync_enabled(cap_xcomp->dpy, cap_xcomp->gl.window, &cap_xcomp->gl, false);
-    if(window_texture_init(&cap_xcomp->window_texture, cap_xcomp->dpy, cap_xcomp->params.window, &cap_xcomp->gl) != 0) {
-        fprintf(stderr, "gsr error: gsr_capture_xcomposite_start: failed get window texture for window %ld\n", cap_xcomp->params.window);
-        gsr_gl_unload(&cap_xcomp->gl);
+    cap_xcomp->egl.eglSwapInterval(cap_xcomp->egl.egl_display, 0);
+    // TODO: Fallback to composite window
+    if(window_texture_init(&cap_xcomp->window_texture, cap_xcomp->dpy, cap_xcomp->params.window, &cap_xcomp->egl) != 0) {
+        fprintf(stderr, "gsr error: gsr_capture_xcomposite_cuda_start: failed get window texture for window %ld\n", cap_xcomp->params.window);
+        gsr_egl_unload(&cap_xcomp->egl);
         return -1;
     }

-    cap_xcomp->gl.glBindTexture(GL_TEXTURE_2D, window_texture_get_opengl_texture_id(&cap_xcomp->window_texture));
+    cap_xcomp->egl.glBindTexture(GL_TEXTURE_2D, window_texture_get_opengl_texture_id(&cap_xcomp->window_texture));
     cap_xcomp->texture_size.x = 0;
     cap_xcomp->texture_size.y = 0;
-    cap_xcomp->gl.glGetTexLevelParameteriv(GL_TEXTURE_2D, 0, GL_TEXTURE_WIDTH, &cap_xcomp->texture_size.x);
-    cap_xcomp->gl.glGetTexLevelParameteriv(GL_TEXTURE_2D, 0, GL_TEXTURE_HEIGHT, &cap_xcomp->texture_size.y);
-    cap_xcomp->gl.glBindTexture(GL_TEXTURE_2D, 0);
+    cap_xcomp->egl.glGetTexLevelParameteriv(GL_TEXTURE_2D, 0, GL_TEXTURE_WIDTH, &cap_xcomp->texture_size.x);
+    cap_xcomp->egl.glGetTexLevelParameteriv(GL_TEXTURE_2D, 0, GL_TEXTURE_HEIGHT, &cap_xcomp->texture_size.y);
+    cap_xcomp->egl.glBindTexture(GL_TEXTURE_2D, 0);

     cap_xcomp->texture_size.x = max_int(2, cap_xcomp->texture_size.x & ~1);
     cap_xcomp->texture_size.y = max_int(2, cap_xcomp->texture_size.y & ~1);

     cap_xcomp->target_texture_id = gl_create_texture(cap_xcomp, cap_xcomp->texture_size.x, cap_xcomp->texture_size.y);
     if(cap_xcomp->target_texture_id == 0) {
-        fprintf(stderr, "gsr error: gsr_capture_xcomposite_start: failed to create opengl texture\n");
-        gsr_capture_xcomposite_stop(cap, video_codec_context);
+        fprintf(stderr, "gsr error: gsr_capture_xcomposite_cuda_start: failed to create opengl texture\n");
+        gsr_capture_xcomposite_cuda_stop(cap, video_codec_context);
         return -1;
     }

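
The start function clamps the window texture size with max_int(2, size & ~1): clearing bit 0 rounds a non-negative size down to an even number, and max_int keeps the result at 2 or more. The standalone version below uses a copy of max_int() and a hypothetical helper name, even_dimension. The diff does not say why even sizes are needed; a common reason is that 4:2:0 chroma-subsampled formats such as NV12 store one chroma sample per 2x2 block of pixels.

```c
/* Copy of max_int() from the file shown in this diff. */
static int max_int(int a, int b) {
    return a > b ? a : b;
}

/* Hypothetical helper mirroring max_int(2, size & ~1). */
static int even_dimension(int size) {
    /* & ~1 clears the lowest bit, e.g. 1921 -> 1920, while 1080 stays 1080 */
    return max_int(2, size & ~1);
}
```

A 1921x1081 window therefore becomes a 1920x1080 target texture, and a degenerate 1x1 or 0x0 size becomes 2x2.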

@@ -244,17 +193,17 @@ static int gsr_capture_xcomposite_start(gsr_capture *cap, AVCodecContext *video_
     video_codec_context->height = cap_xcomp->texture_size.y;

     if(!gsr_cuda_load(&cap_xcomp->cuda)) {
-        gsr_capture_xcomposite_stop(cap, video_codec_context);
+        gsr_capture_xcomposite_cuda_stop(cap, video_codec_context);
         return -1;
     }

     if(!cuda_create_codec_context(cap_xcomp, video_codec_context)) {
-        gsr_capture_xcomposite_stop(cap, video_codec_context);
+        gsr_capture_xcomposite_cuda_stop(cap, video_codec_context);
         return -1;
     }

     if(!cuda_register_opengl_texture(cap_xcomp)) {
-        gsr_capture_xcomposite_stop(cap, video_codec_context);
+        gsr_capture_xcomposite_cuda_stop(cap, video_codec_context);
         return -1;
     }


@@ -262,13 +211,13 @@ static int gsr_capture_xcomposite_start(gsr_capture *cap, AVCodecContext *video_
     return 0;
 }

-static void gsr_capture_xcomposite_stop(gsr_capture *cap, AVCodecContext *video_codec_context) {
-    gsr_capture_xcomposite *cap_xcomp = cap->priv;
+static void gsr_capture_xcomposite_cuda_stop(gsr_capture *cap, AVCodecContext *video_codec_context) {
+    gsr_capture_xcomposite_cuda *cap_xcomp = cap->priv;

     window_texture_deinit(&cap_xcomp->window_texture);

     if(cap_xcomp->target_texture_id) {
-        cap_xcomp->gl.glDeleteTextures(1, &cap_xcomp->target_texture_id);
+        cap_xcomp->egl.glDeleteTextures(1, &cap_xcomp->target_texture_id);
         cap_xcomp->target_texture_id = 0;
     }


@@ -280,35 +229,42 @@ static void gsr_capture_xcomposite_stop(gsr_capture *cap, AVCodecContext *video_
     av_buffer_unref(&video_codec_context->hw_device_ctx);
     av_buffer_unref(&video_codec_context->hw_frames_ctx);

+    if(cap_xcomp->cuda.cu_ctx) {
+        CUcontext old_ctx;
+        cap_xcomp->cuda.cuCtxPushCurrent_v2(cap_xcomp->cuda.cu_ctx);
+
         cap_xcomp->cuda.cuGraphicsUnmapResources(1, &cap_xcomp->cuda_graphics_resource, 0);
         cap_xcomp->cuda.cuGraphicsUnregisterResource(cap_xcomp->cuda_graphics_resource);
+        cap_xcomp->cuda.cuCtxPopCurrent_v2(&old_ctx);
+    }
     gsr_cuda_unload(&cap_xcomp->cuda);

-    gsr_gl_unload(&cap_xcomp->gl);
+    gsr_egl_unload(&cap_xcomp->egl);
     if(cap_xcomp->dpy) {
+        // TODO: Why is this crashing?
         XCloseDisplay(cap_xcomp->dpy);
         cap_xcomp->dpy = NULL;
     }
 }

-static void gsr_capture_xcomposite_tick(gsr_capture *cap, AVCodecContext *video_codec_context, AVFrame **frame) {
-    gsr_capture_xcomposite *cap_xcomp = cap->priv;
+static void gsr_capture_xcomposite_cuda_tick(gsr_capture *cap, AVCodecContext *video_codec_context, AVFrame **frame) {
+    gsr_capture_xcomposite_cuda *cap_xcomp = cap->priv;

-    cap_xcomp->gl.glClear(GL_COLOR_BUFFER_BIT);
+    cap_xcomp->egl.glClear(GL_COLOR_BUFFER_BIT);

     if(!cap_xcomp->created_hw_frame) {
+        cap_xcomp->created_hw_frame = true;
         CUcontext old_ctx;
         cap_xcomp->cuda.cuCtxPushCurrent_v2(cap_xcomp->cuda.cu_ctx);

         if(av_hwframe_get_buffer(video_codec_context->hw_frames_ctx, *frame, 0) < 0) {
-            fprintf(stderr, "gsr error: gsr_capture_xcomposite_tick: av_hwframe_get_buffer failed\n");
+            fprintf(stderr, "gsr error: gsr_capture_xcomposite_cuda_tick: av_hwframe_get_buffer failed\n");
             cap_xcomp->should_stop = true;
             cap_xcomp->stop_is_error = true;
             cap_xcomp->cuda.cuCtxPopCurrent_v2(&old_ctx);
             return;
         }

-        cap_xcomp->created_hw_frame = true;
         cap_xcomp->cuda.cuCtxPopCurrent_v2(&old_ctx);
     }

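
In the renamed tick function, created_hw_frame is now set to true before av_hwframe_get_buffer() is attempted rather than after it succeeds, so a failed allocation is tried only once (the failure path still sets should_stop). The sketch below models that ordering in plain C with an attempt counter; tick_state, fake_get_buffer and tick are hypothetical stand-ins, not FFmpeg or gsr APIs.

```c
#include <stdbool.h>

/* Hypothetical stand-in for the capture state touched by the tick function. */
typedef struct {
    bool created_hw_frame;
    bool should_stop;
    int allocation_attempts;
} tick_state;

/* Stand-in for av_hwframe_get_buffer(): counts calls, returns < 0 on failure. */
static int fake_get_buffer(tick_state *s, bool fail) {
    s->allocation_attempts++;
    return fail ? -1 : 0;
}

/* Mirrors the new ordering: mark the frame as created first, then allocate. */
static void tick(tick_state *s, bool allocation_fails) {
    if(!s->created_hw_frame) {
        s->created_hw_frame = true;
        if(fake_get_buffer(s, allocation_fails) < 0) {
            s->should_stop = true;
            return;
        }
    }
}
```

With the old ordering (flag set only after success), every call after a failure would retry the allocation until the caller acted on should_stop; with the new ordering, repeated ticks after a failure leave the attempt count at one.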

||||||

@@ -343,25 +299,25 @@ static void gsr_capture_xcomposite_tick(gsr_capture *cap, AVCodecContext *video_

|

|||||||

cap_xcomp->window_resized = false;

|